
How I Made My Own ADSB Exchange Clone In A Weekend

This is a work in progress!

Introduction

I've been promoting the hobby of ADS-B reception to members of the Online Amateur Radio Community (OARC) for a good six months now, and quite a lot of people there do it, many of them for a number of years. We've had a great time optimising our systems and sharing useful tips for getting the most out of them. We also share news from the hobby, and one of the larger stories was - inevitably - the sale of ADSB Exchange to a private equity firm.

A lot of the folk behind this technology had worked with ADSB Exchange, and after the sale they decided to channel their efforts into other crowdsourced, open feeder networks. Repositories were cloned, names and URLs were find-and-replaced, and within a week or so there were four replacement project websites, all built on the open-source technology that powered ADSB Exchange.

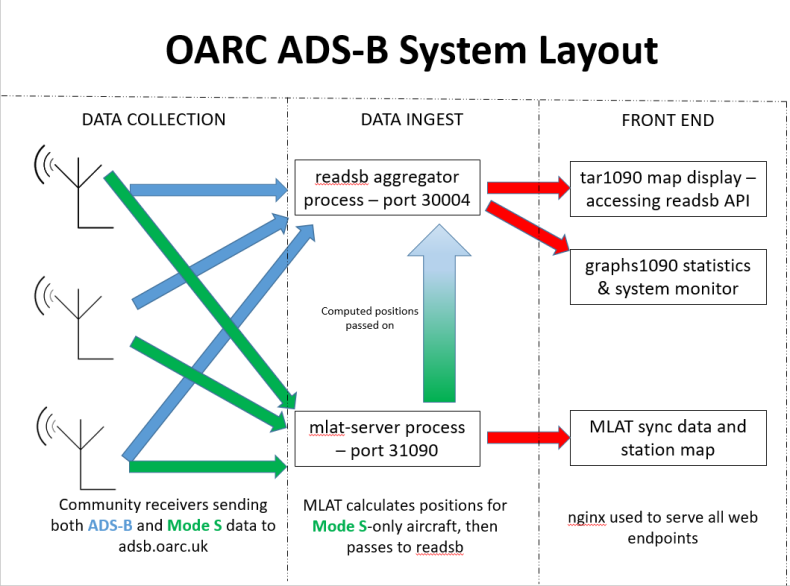

Spurred on by this, along with some comments on our club Discord about how it would be nice to see the data that OARC members specifically were contributing to various networks, I decided to embark on a project to create our very own ADS-B aggregator. This would be on a much smaller scale than the larger websites, but would still require a fairly complex setup of many different components working together.

I've decided to document the process along the way and hopefully add something useful to the literature on the server side of ADS-B tracking setups. A lot of the available open-source software is well documented on the client/feeder side, but not on the server side. This isn't something to be mad at: those back-end and front-end components took a lot of work to create and were made for a specific, required purpose. There was never any need or expectation to provide support for them.

The software, sadly, wasn't quite the turnkey experience for me that I think was mooted - the idea being that anyone could start their own network if something happened to a site. Our early testing resulted in errors with the containerisation setup that rendered the entire system unusable from the start. And that's fine: we hadn't got a clue what we were doing and we didn't code any of this stuff. So we had no idea what was being installed or how it fitted together.

What follows is a project log - written after the fact and hopefully not full of errors and omissions - on how I was able to get this all working over the course of a weekend on a donated VPS and provide the following services:

- A server that listens for ADS-B data from feeders

- A server that listens for MLAT data from feeders (if you want to use MLAT)

- A map system that displays live traffic, as well as a playback history of stored data on the system

- A map of MLAT feeders and their links with other stations with obfuscated positions for privacy

- Webpages that show sync data and links between MLAT peers in more detail

- Some basic performance graphs for monitoring system data and traffic stats

Minus some extras like a status page for checking whether you are feeding okay (which can be replaced with a single one-line command on the user end), this is enough to build a small-scale flight-tracking experience, albeit not a containerised one and not one that will scale easily. It would suit a single area or country, or small-scale experiments.
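The one-line command isn't given here, so this is only a sketch of one way a feeder could check themselves: look for an established TCP connection to your aggregator's Beast port. It assumes iproute2's `ss -tn` output is piped in on stdin and that you pass an IPv4 address (with `-n`, ss prints numeric addresses, so a hostname won't match). `feeding_ok` and 203.0.113.10 are hypothetical placeholders; 30004 is the Beast port used in the readsb config below.

```sh
#!/bin/sh
# Sketch: succeed if `ss -tn` output (read from stdin) shows an ESTABLISHED
# TCP connection to the given peer. Hypothetical helper, not part of any
# feeder package. IPv4 only: IPv6 peers are printed as [addr]:port by ss.
feeding_ok() {
    local peer_ip peer_port
    peer_ip=$1
    peer_port=$2
    # In `ss -tn` output, column 1 is the state and column 5 is peer address:port.
    awk -v want="${peer_ip}:${peer_port}" '
        $1 == "ESTAB" && $5 == want { found = 1 }
        END { exit (found ? 0 : 1) }
    '
}
```

On a feeder, usage would look like `ss -tn | feeding_ok 203.0.113.10 30004 && echo "feeding OK"`, with your own server's IP substituted.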

System Description

The basic manual setup for all of this consists of the following things, installed in somewhat of a sequence, but you'll be jumping back and forth a lot when testing:

- OS setup (in this case, a fresh Debian VPS provided by Mark 2W0YMS which needed a few extra things adding)

- readsb (listens for ADS-B data)

- mlat-server (listens for MLAT data)

- tar1090 (web front-end)

- mlat-server-sync-map (displays web-based MLAT sync information map/data tables)

- Feeder setup scripts for easy installation by users (name them however you want; we'll clone these from elsewhere and modify them and the other software along the way). These install readsb and mlat-client

I found it easier to clone all of these repos and push my own changes so that we had a single source for everything that could be replicated if needed in the future with minimal effort.

OS Setup

Not much to be said here, but I had to install git, curl and a few other nice things so that I could actually use the box. We also need some extra users to work with, as well as some specific directories later, but we'll create those at each step. Changing the hostname is a good idea too.

I installed nginx as my web server choice at this point as most of the software used for this had example config files for it.

If you're wanting HTTPS - and you probably are - Certbot is worth installing, and you can find help for that in many places.

sudo apt install git nginx wget curl
sudo hostnamectl set-hostname <hostname>

A DNS record was pointed in my direction so people didn't have to remember an IP, but you don't have to do this.

readsb

Readsb needs to be compiled from source in order to run it properly as an aggregator rather than a local receiver display, mostly so it can write the files required for tar1090 to run in the proper “globe” mode with history playback. We will also omit the RTL-SDR stuff as we obviously won't need that.

A few prerequisites first…

sudo apt update
sudo apt install --no-install-recommends --no-install-suggests -y \
    build-essential debhelper libusb-1.0-0-dev \
    librtlsdr-dev librtlsdr0 pkg-config \
    libncurses5-dev zlib1g-dev zlib1g

Then compile and install…

cd /tmp
rm -rf /tmp/readsb
git clone --depth 1 https://github.com/wiedehopf/readsb.git -b dev
cd readsb
rm -f ../readsb_*.deb
export DEB_BUILD_OPTIONS=noddebs
dpkg-buildpackage -b -ui -uc -us
sudo dpkg -i ../readsb_*.deb

Configuration

This can be done by editing the config in /etc/default:

sudo nano /etc/default/readsb

There are numerous things configured here, and we will come back to it a few times:

RECEIVER_OPTIONS=""
DECODER_OPTIONS="--write-json-every 1"
NET_OPTIONS="--net --net-only --net-ingest --net-heartbeat 60 --net-ro-size 1250 --net-ro-interval 0.05 --net-bi-port 30004 --net-receiver-id --tar1090-use-api --net-api-port unix:/run/readsb/api.sock"
JSON_OPTIONS="--json-location-accuracy 2 --write-receiver-id-json"

You'll need to make some edits, remove some things and add some things. In reality any of the options can go into any of the four fields, but it helps to keep them somewhat organised. With this config we're:

- Enabling a network port for Beast data from feeders, 30004

- Enabling network mode only, and marking the process as an ingest node

- Removing ALL RTL-SDR configuration options

- Passing receiver IDs to the map for tracking how many are receiving a particular plane

- Leaving out the maximum range beyond which positions are discarded, which makes little sense for an aggregator taking data from receivers spread over a wide area

- Specifying an API endpoint (here a Unix socket, unix:/run/readsb/api.sock, rather than a TCP port) so tar1090 can query readsb directly instead of reading JSON files from disk, which performs better
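In a line this long a mistyped flag (an extra dash, say) is easy to miss, so it can be worth checking the file before restarting. This is a minimal sketch, assuming the file keeps the four *_OPTIONS variables shown above; `readsb_missing_opts` and the list of required flags are my own hypothetical choices, so adjust them to your setup.

```sh
#!/bin/sh
# Sketch: list aggregator flags missing from a readsb defaults file.
# Assumes the RECEIVER/DECODER/NET/JSON_OPTIONS layout of /etc/default/readsb.

# Flags this guide relies on (hypothetical list; edit it to match your config).
REQUIRED_READSB_OPTS="--net --net-only --net-ingest --net-receiver-id --tar1090-use-api --net-api-port --write-receiver-id-json"

readsb_missing_opts() {
    local all opt
    # Source the file in a subshell so its variables don't leak into this shell.
    all=$(
        unset RECEIVER_OPTIONS DECODER_OPTIONS NET_OPTIONS JSON_OPTIONS
        . "$1" || exit 2
        printf '%s' "$RECEIVER_OPTIONS $DECODER_OPTIONS $NET_OPTIONS $JSON_OPTIONS"
    ) || return 2
    for opt in $REQUIRED_READSB_OPTS; do
        # Pad with spaces so only whole words match: "---tar1090-use-api"
        # does not count as "--tar1090-use-api".
        case " $all " in
            *" $opt "*) ;;
            *) printf '%s\n' "$opt" ;;
        esac
    done
}
```

Running `readsb_missing_opts /etc/default/readsb` prints each missing flag on its own line, and nothing at all when everything is present.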

You can find out what everything does with readsb --help; there are many options. Then we can restart readsb:

sudo systemctl restart readsb

You now have a server listening for data! Next we need to install the display side of things…

tar1090

We'll be installing tar1090 in the standard way that you would locally, but there are changes that need making to the readsb configuration in order to get the extra globe/history functions working. Install with this first:

sudo bash -c "$(wget -nv -O - https://github.com/wiedehopf/tar1090/raw/master/install.sh)"

Configuration

sudo nano /etc/default/tar1090

A couple of things to configure for tar1090 first. You may want to change both of these depending on your incoming data level and what features you want to provide for site visitors:

# Time in seconds between snapshots in the track history
INTERVAL=8
# hours of tracks that /?pTracks will show
PTRACKS=24

Now we'll make the required changes to the readsb config:

sudo nano /etc/default/readsb

And in one of the fields add this, then save and exit:

--write-json-globe-index --write-globe-history /var/globe_history --heatmap 30 --db-file /usr/local/share/tar1090/aircraft.csv.gz

Download that referenced CSV file of aircraft types to the tar1090 directory:

sudo wget -O /usr/local/share/tar1090/aircraft.csv.gz https://github.com/wiedehopf/tar1090-db/raw/csv/aircraft.csv.gz

Finally we need to make a directory to hold the history files and make it writable by readsb:

sudo mkdir /var/globe_history
sudo chown readsb /var/globe_history
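If history playback doesn't seem to work later, this directory is a good first thing to check. A small sketch, assuming GNU stat (as on Debian) and that readsb runs as the readsb user the chown above refers to; `history_dir_ok` is a hypothetical helper name.

```sh
#!/bin/sh
# Sketch: check the globe history directory exists and is owned by the
# account readsb runs as (assumed to be "readsb", as in the chown above).
# Needs GNU stat for the -c %U (owner user name) format.
history_dir_ok() {
    local dir owner
    dir=${1:-/var/globe_history}
    owner=${2:-readsb}
    if [ ! -d "$dir" ]; then
        echo "missing directory: $dir" >&2
        return 1
    fi
    if [ "$(stat -c %U "$dir")" != "$owner" ]; then
        echo "$dir is not owned by $owner" >&2
        return 1
    fi
    return 0
}
```

With no arguments it checks /var/globe_history against the readsb user: `history_dir_ok && echo "history dir OK"`.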

Then restart both readsb and tar1090:

sudo systemctl restart tar1090
sudo systemctl restart readsb

nginx

When installing tar1090 it should have given you an example config for nginx. We'll go and add that configuration now and change anything we need to, as well as completing the setup so the readsb API is accessible to tar1090.

Inside your server configuration, check that the tar1090 config is being included from /usr/local/share/tar1090, and if you use HTTPS don't forget you need it in BOTH server blocks (port 80 and port 443):

server {
    listen 80 default_server;
    listen [::]:80 default_server;

    include /usr/local/share/tar1090/nginx-tar1090.conf;

    # Rest of config below...
}

Now we need to include the block that makes readsb API access work, which is here: https://github.com/wiedehopf/tar1090/blob/master/nginx-readsb-api.conf - I just copied the block into the tar1090 config file referenced above, /usr/local/share/tar1090/nginx-tar1090.conf. That file also mentions two options to add to your readsb config, so open /etc/default/readsb and make sure --tar1090-use-api and --net-api-port unix:/run/readsb/api.sock are in there, perhaps in the NET_OPTIONS section (the example config earlier already includes them).

At this point you should probably restart everything:

sudo systemctl restart nginx
sudo systemctl restart readsb
sudo systemctl restart tar1090

Your server side is - hopefully - complete!

Feeder clients

The feeder clients for both the ADS-B feed and MLAT (if you want to use it - see below) were cloned from the adsb.fi versions, and basically all I did was change every single reference to “adsbfi” throughout the codebase to “oarc-adsb”. This wasn't a very fun process and required me to go through every single source file by hand. But the end result was a package that automatically installs two clients on feeder systems, named appropriately (you'd pick your own name here). This is also where you pre-configure the software to connect to your server when people install it. Clone the adsbfi repos to your own and make sure the scripts download everything from your copies. Then you can edit things to your heart's content on your own forks.

Your feeders should, ideally, be exposing Beast data from their SDR on port 30005. They can do this with dump1090 or readsb, but readsb has better features and everyone should probably use it. You can direct them to the readsb repository and its automatic install scripts to get them going.

So, inside the configure.sh file, change the configuration near the bottom to include your server's IP/hostname and the Beast port you chose earlier when setting up readsb (30004 in the example above). Leave MLATSERVER blank for now, and you can leave the RESULTS lines out as well.

MLATSERVER=""
TARGET="--net-connector <your server address>,<your server port>,beast_reduce_plus_out"
NET_OPTIONS="--net-heartbeat 60 --net-ro-size 1280 --net-ro-interval 0.2 --net-ro-port 0 --net-sbs-port 0 --net-bi-port 0 --net-bo-port 0 --net-ri-port 0 --write-json-every 1 --uuid-file /usr/local/share/<app name>/<app name>-uuid"
JSON_OPTIONS="--max-range 450 --json-location-accuracy 2 --range-outline-hours 24"

The best message format to use is probably beast_reduce_plus_out, as it sends each feeder's real receiver ID rather than a hashed one. This enables more accurate feeder counts, latency measurements and some other things I don't fully understand relating to cool things that readsb can do.
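Because the same address and port end up in every copy of configure.sh, a tiny helper can generate the TARGET line and catch a bad port before it ships to feeders. This is a hypothetical sketch, not part of the adsbfi scripts; adsb.example.org is a placeholder hostname and 30004 is the Beast port set up on the server earlier.

```sh
#!/bin/sh
# Sketch: print the TARGET line for configure.sh from your server's address
# and the Beast port readsb listens on. make_target is a hypothetical helper.
make_target() {
    local host port
    host=$1
    port=$2
    # Reject empty or non-numeric ports.
    case $port in
        ''|*[!0-9]*) echo "invalid port: $port" >&2; return 1 ;;
    esac
    # Reject anything outside the valid TCP port range.
    if [ "$port" -lt 1 ] || [ "$port" -gt 65535 ]; then
        echo "invalid port: $port" >&2
        return 1
    fi
    printf 'TARGET="--net-connector %s,%s,beast_reduce_plus_out"\n' "$host" "$port"
}
```

For example, `make_target adsb.example.org 30004` prints `TARGET="--net-connector adsb.example.org,30004,beast_reduce_plus_out"`, ready to paste into configure.sh.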

So, clone the repo, make your changes and push them to the remote, and then send folk to your GitHub repo to install the feeder clients.

If you decide not to go with MLAT and you are feeling brave you can edit the scripts to remove all references to MLAT, or just have people use 0 for an MLAT username on install to disable it.

MLAT

After this you have a choice. If you want to enable MLAT you can carry on with the below. If not you can safely stop here… your website is complete! Otherwise…

The key thing here is to have the mlat-server running and listening for data on a specific port (which we'll configure feeders to send to later); 31090 is the default for this. The calculated positions need sending on to the aggregator's readsb process so they show up on the aggregated map, so the server's readsb needs setting up to expect this input on the right port, which can be any free port on your server.

After this server-side work you should also configure your feeder clients to send MLAT data to the server. They should also forward any MLAT results sent back from your MLAT server to both your website's ADS-B client and the feeder's local readsb, so the positions show up on the feeder's local map. You need to choose a port for this on feeders' systems that doesn't clash with a port used by ANY other aggregator's client on those systems.

MLAT Server

This was a pain to do, as I couldn't get the Python venv running in /opt/mlat-python-env/ under a new nologin account that I'd set up, so I had to run it under my own username. Not ideal. Maybe you know what you're doing.

First, follow the instructions here to install the mlat-server: https://github.com/wiedehopf/mlat-server

You could make this a systemd service if you wanted; doing so makes it easy to start and stop as needed.

You also need to choose a port to feed results back to the readsb process on. We're gonna use SBS basestation format, remember that for later. Add this to your mlat-server command line:

--basestation-connect localhost:results_port

Bring up the mlat-server per the instructions.
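If you do go the systemd route, something along these lines could generate the unit file. Treat it as a sketch: the venv path is the one mentioned above, but the checkout location /opt/mlat-server, the run-as user, the example results port and the --client-listen flag are assumptions on my part, so check the flags against the mlat-server help output before relying on it.

```sh
#!/bin/sh
# Sketch: print a systemd unit for mlat-server.
# $1 = account to run as, $2 = results port used with --basestation-connect.
# /opt/mlat-server and --client-listen are assumptions; verify against the repo.
mlat_unit() {
    local user results_port venv src
    user=$1
    results_port=$2
    venv=/opt/mlat-python-env    # venv location mentioned in this guide
    src=/opt/mlat-server         # hypothetical checkout location
    cat <<EOF
[Unit]
Description=mlat-server (MLAT aggregator)
Wants=network-online.target
After=network-online.target readsb.service

[Service]
User=$user
WorkingDirectory=$src
ExecStart=$venv/bin/python3 $src/mlat-server --client-listen 31090 --basestation-connect localhost:$results_port
Restart=always
RestartSec=30

[Install]
WantedBy=multi-user.target
EOF
}
```

Usage might be `mlat_unit youruser 39001 | sudo tee /etc/systemd/system/mlat-server.service`, followed by `sudo systemctl daemon-reload` and `sudo systemctl enable --now mlat-server`.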

readsb configuration change

Now we need to tell readsb to open a port to receive these calculated positions. In /etc/default/readsb, set --net-sbs-in-port (from 0, if it is already there) to one less than the results port you specified in the mlat-server configuration. readsb assigns its various SBS ingest ports counting up from that one, and the next one along is the MLAT results port. An odd way of doing it, but that was what the software's author suggested.
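The off-by-one is easy to get backwards, so here it is as a sketch: pass in the results port you gave mlat-server's --basestation-connect and it prints the --net-sbs-in-port value for /etc/default/readsb. `sbs_in_port_for` is a hypothetical helper and 39001 is only an example port.

```sh
#!/bin/sh
# Sketch: turn the results port given to mlat-server's --basestation-connect
# into the --net-sbs-in-port value for readsb, which is one port lower
# (readsb then treats the next port up as its MLAT results input).
sbs_in_port_for() {
    local p
    p=$1
    # Digits only, no leading zero (shell arithmetic would read it as octal).
    case $p in
        ''|0*|*[!0-9]*) echo "invalid port: $p" >&2; return 1 ;;
    esac
    if [ "$p" -lt 2 ] || [ "$p" -gt 65535 ]; then
        echo "port out of range: $p" >&2
        return 1
    fi
    printf '%s %d\n' --net-sbs-in-port "$((p - 1))"
}
```

With an example results port of 39001, `sbs_in_port_for 39001` prints `--net-sbs-in-port 39000`, and 39001 becomes readsb's MLAT results input.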

Restart the readsb service.

MLAT Client

We're gonna fill in that MLATSERVER line now, along with a few other things. Inside MLATSERVER enter the “server_hostname:31090” that you set up your mlat-server on earlier. After the UAT_INPUT line add the following:

RESULTS="--results beast,connect,127.0.0.1:30104"
RESULTS2="--results basestation,listen,whatever_port"
RESULTS3="--results beast,listen,30157"
RESULTS4="--results beast,connect,127.0.0.1:results_port"

Results 2 and 3 seem redundant (edit: 3 seems to be ADSB Exchange related…), but the first one connects to your local readsb process and sends on any MLAT results, and the last line is used as a connection between your website's MLAT client and ADS-B client. The ADS-B tracker experience is very much based on data pipelines with all sorts of interconnections, and the diagram further back up the page shows most of them.

RESULTS4 therefore needs to be set to the port you picked earlier that doesn't conflict with any other aggregator website's MLAT results port.
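Before settling on that port, a feeder-side check can at least confirm nothing is already listening on it. This sketch reads iproute2's `ss -ltn` output on stdin; it only sees clients that are currently running, so a stopped client's port won't show up. `port_free` and the example port 31999 are hypothetical.

```sh
#!/bin/sh
# Sketch: succeed if nothing in `ss -ltn` output (read from stdin) is already
# listening on the given TCP port. Hypothetical helper for choosing RESULTS4's port.
port_free() {
    local port
    port=$1
    awk -v want="$port" '
        # Column 4 is Local Address:Port; the port is the text after the last colon.
        $1 == "LISTEN" { n = split($4, parts, ":"); if (parts[n] == want) used = 1 }
        END { exit (used ? 1 : 0) }
    '
}
```

On a feeder: `ss -ltn | port_free 31999 && echo "31999 looks free"`.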

One other thing we have to do is tell the mlat-client where to look for your site's UUID when it's being compiled by a user. This is best done by cloning the mlat-client repo and then changing the “mlat-client” file in the root of it. Here's the relevant line, 105:

uuid_path = [ '/usr/local/share/xxxx/xxxx-uuid', '/boot/xxxx-uuid' ]

Change the xxxx parts to match your client's install folder in /usr/local/share/.
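To confirm a feeder's UUID file is where the mlat-client will look, this sketch checks the same two locations in the same order as uuid_path and prints the first non-empty one (that the list is tried in order is my assumption). `<app name>` is your client name as passed in the first argument; `find_feeder_uuid` and its optional path-prefix argument are hypothetical.

```sh
#!/bin/sh
# Sketch: print the feeder UUID from the first non-empty file among the same
# paths as mlat-client's uuid_path list, with xxxx replaced by the client name.
# $1 = client name, $2 = optional path prefix (only useful for testing).
find_feeder_uuid() {
    local app root f
    app=$1
    root=${2:-}
    for f in "$root/usr/local/share/$app/$app-uuid" "$root/boot/$app-uuid"; do
        if [ -s "$f" ]; then
            # Strip whitespace such as the trailing newline, then end the line.
            tr -d '[:space:]' < "$f"
            echo
            return 0
        fi
    done
    echo "no UUID file found for $app" >&2
    return 1
}
```

On a feeder, `find_feeder_uuid <app name>` either prints the UUID or reports that no file was found.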

So, make changes to your clients, push them to your repos, and then let your feeders loose on them to reinstall everything including MLAT.

That's it! You're done! When you have enough positional data you'll get MLAT tracks on your aggregator website, plus that data will be sent back to your feeders.

Extras

graphs1090

This one is easy peasy. Use the install script on the graphs1090 repo to install it: https://github.com/wiedehopf/graphs1090

Then change any configuration options you like, and maybe edit the index.html to get rid of any graphs you don't want. The gain graph is a good one to lose, as it's useless on a network-only aggregator with no SDR attached. Then restart the service and make sure your nginx config has a line pointing to the right place to make it accessible.

MLAT sync map and table

Very optional: https://github.com/wiedehopf/mlat-server-sync-map

Stats API

This bit was hard and I just picked adsb.lol's setup and edited it a lot: https://github.com/adsblol/infra